Addressing Colorism in AI

- Colorism is rooted in colonialism and caste systems and persists in media, digital products, and AI

- Colorism creates and perpetuates inequities

- Diverse data and images, data labeling, transparency, diverse teams, monitoring, bias mitigation techniques, user testing, and ethical reviews can help break the bias

Colorism, a form of discrimination based on skin color, has a long and complex history. It can be traced back to the European colonization of various parts of the world. During this period, colonizers imposed their own standards of beauty and superiority, which often favored lighter skin tones. This led to the marginalization of, and discrimination against, individuals with darker skin tones.

Colorism can also be attributed to historical caste systems in which skin color was used as a marker of social status. The influence of these caste systems persists in modern society, perpetuating discriminatory attitudes towards darker-skinned individuals.

Beyond these historical factors, colorism is reinforced by societal beauty standards and media representation. The prevalence of Eurocentric beauty ideals in the media, AI, and digital products often leads to the marginalization of individuals with darker skin tones, perpetuating the cycle of colorism.

Where We See Colorism

Colorism manifests itself in various forms, both overt and subtle. Overt forms of colorism include explicit discrimination and bias based on skin color. This can be seen in situations where individuals with lighter skin tones are given preferential treatment and opportunities compared to those with darker skin tones.

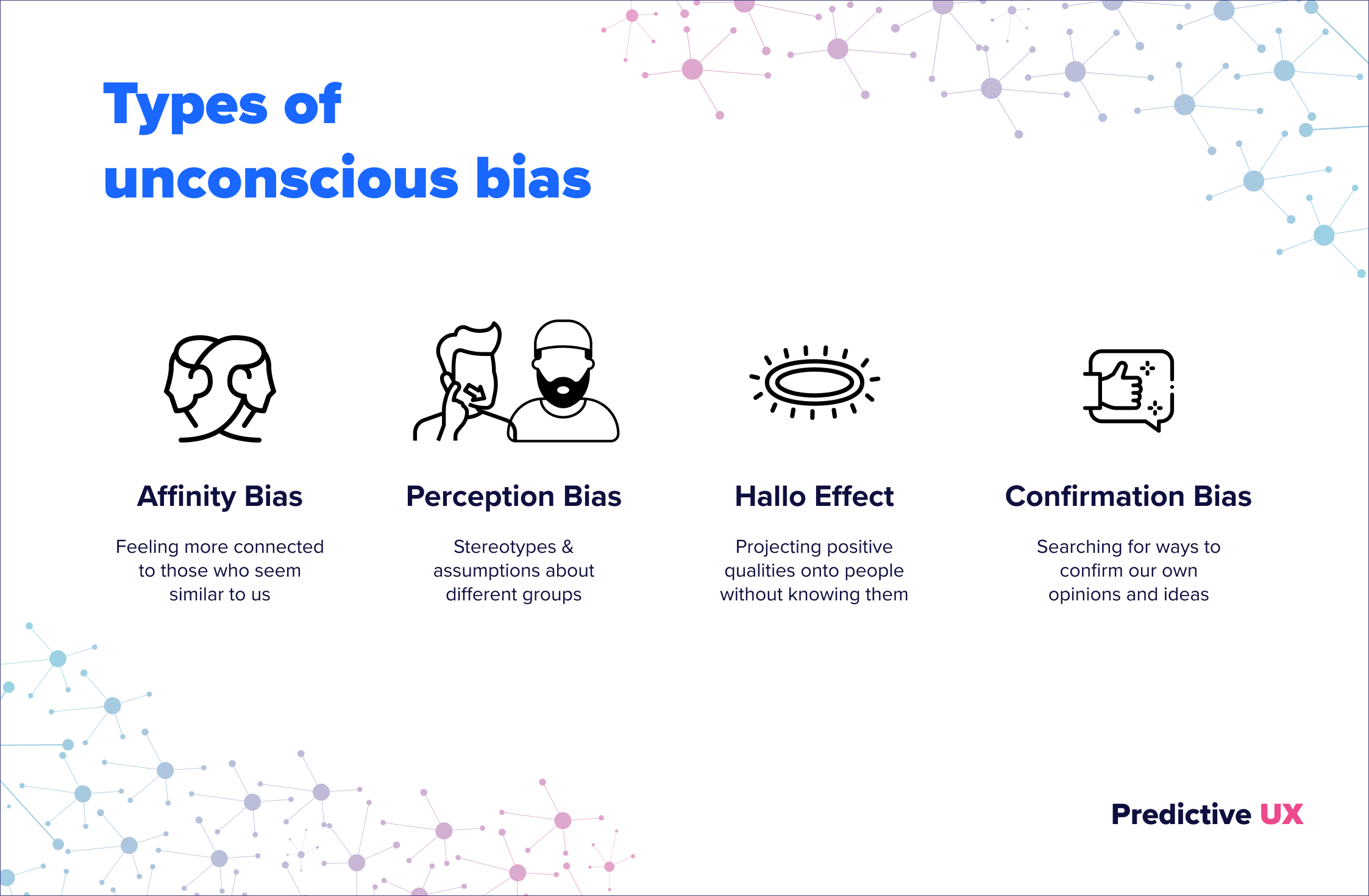

Subtle forms of colorism include unconscious biases and stereotypes that influence our perceptions and judgments. These biases can affect product design, datasets, hiring decisions, promotions, and overall opportunities in various industries, including the tech industry.

It is important to recognize that colorism can also occur within communities of the same racial or ethnic background. Intra-racial colorism refers to discrimination based on varying degrees of skin color within a particular racial or ethnic group. This form of colorism highlights the complexity of the issue and the need for intersectional approaches in addressing it.

Colorism has wide-ranging effects; it can lead to low self-esteem, body image issues, and mental health problems among those who experience discrimination based on their skin color. The constant reinforcement of Eurocentric beauty standards in AI and digital products can create a sense of inadequacy and perpetuate feelings of inferiority.

Moreover, colorism has economic implications: individuals with darker skin tones may face barriers in education, employment, and upward mobility, especially now that AI is being used in hiring decisions. This perpetuates social and economic inequalities, further marginalizing certain groups within society.

The effects of colorism can also be seen on a larger scale, impacting representation and diversity in various industries. In the tech industry, for example, the underrepresentation of individuals with darker skin tones can lead to biased algorithms and AI systems that reinforce discriminatory practices. This highlights the need for diversity and inclusion in tech to ensure equitable outcomes.

Impact of Colorism in AI and Product Design

Colorism has significant implications for AI and product design. AI algorithms are trained on vast amounts of data, which often reflects societal biases, including colorism. If the training data is biased towards lighter skin tones, it can result in AI systems that perpetuate discriminatory practices.
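One rough way to check whether training data leans towards lighter skin tones is to count how often each group appears. The sketch below assumes every example already carries a skin-tone annotation. The group names, the `expected_groups` list, and the `min_share` threshold are all hypothetical choices for illustration, not an established standard.

```python
from collections import Counter


def skin_tone_shares(labels):
    """Return each skin-tone group's share of the dataset (0.0 to 1.0)."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented(labels, expected_groups, min_share=0.25):
    """List the expected groups whose share falls below min_share.

    Groups with no examples at all are reported too, because a missing
    group has a share of 0.0.
    """
    shares = skin_tone_shares(labels)
    return sorted(g for g in expected_groups if shares.get(g, 0.0) < min_share)


# Hypothetical annotations for a ten-image dataset.
sample = ["light"] * 7 + ["medium"] * 2 + ["dark"] * 1

print(skin_tone_shares(sample))
print(underrepresented(sample, expected_groups=["light", "medium", "dark"]))
```

A check like this only flags imbalance in the annotations you already have. It says nothing about whether those annotations are accurate or whether the chosen threshold is right for your population.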

Product design is also impacted by colorism. For example, photos of people with darker skin tones living happy and successful lives are in very limited supply, which makes it hard for product designers to find images that avoid reinforcing colorism. In short, we need better access to diverse stock photos. We recommend https://nappy.co/, which offers a wonderful selection of "Beautiful photos of Black and Brown people, for free."

Addressing colorism in AI requires a concerted effort to diversify datasets, challenge biases, and promote inclusivity. It is essential to involve individuals from diverse backgrounds in the design and development process to ensure equitable outcomes and mitigate the impact of colorism.

Addressing Colorism in AI: Steps Towards Equality

Addressing colorism requires a multifaceted approach involving education, policy changes, and continuous improvement. It is crucial to educate individuals about the harmful effects of colorism and promote awareness and empathy.

Avoiding colorism in AI is crucial for creating fair and inclusive technology.

At Predictive UX, we take these key steps during our AI projects and encourage you to do the same in yours:

- Diverse Data Collection: Ensure that the training data used for your AI models represent a diverse range of skin tones, ethnicities, and backgrounds. The data should reflect the real-world diversity of the population to minimize biases.

- Balanced Labeling: When labeling data, avoid associating certain skin tones with negative or positive attributes. Labels should be neutral and not reinforce stereotypes. You can get help from taxonomists, linguists, and inclusion experts when labeling to ensure a balanced and fair approach.

- Fair Representation: Verify that your AI models provide equitable results for all skin tones. We do this by regularly testing and evaluating a model's performance across different demographics to identify and rectify biases. This should be done using automated approaches and manual testing to ensure proper oversight.

- Algorithm Transparency: Make your AI algorithms transparent and explainable. Users should understand how the AI makes decisions and be able to question and challenge the outcomes. If you're using a large language model (LLM) and fine-tune it for specific tasks, carefully document and disclose the fine-tuning process. Transparency in fine-tuning helps users understand any potential biases introduced during this phase. You should also document any data preprocessing steps, including data cleaning, filtering, and transformations. Explain how these steps affect the model's behavior.

- Inclusive Development Teams: Build diverse teams that include individuals from various backgrounds and perspectives. A diverse team can help identify and rectify biases in AI development. Work with your HR department to ensure diverse recruiting if you're in a larger enterprise. If you're a smaller company, be sure to recruit from various schools and job sites that promote diversity.

- Ethical Guidelines: Develop and adhere to ethical guidelines that explicitly address issues like fairness, bias, and discrimination in AI systems. These guidelines should be a part of your governance charter but also need to be visible and socialized among your employees, especially the tech and design teams who are building your AI applications.

- Red Teaming and Testing for LLMs: Leverage external red-teaming to identify AI bias, discrimination, and privacy risks. Continuous testing by external groups with different backgrounds and expertise can help you mitigate unsafe or discriminatory behavior.

- Bias Mitigation Techniques: Implement techniques like re-sampling, re-weighting, or adversarial training to mitigate bias during the AI training process.

- Auditing and Assessments: Consider audits and assessments by independent experts, your own employees, and your users to assess the fairness and inclusivity of your AI systems.

- Education: Educate users and stakeholders about the potential biases in AI systems and the steps taken to address them. Encourage everyone to report any issues they encounter.

- Governance and Accountability: Establish governance with clear lines of accountability within your organization for addressing bias and ensuring fair AI outcomes. This should include the establishment of ethics review boards or committees to oversee AI projects and assess their fairness and ethical implications.
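Two of the steps above, Fair Representation and Bias Mitigation Techniques, lend themselves to a small code illustration. The following is a minimal sketch, assuming you have true labels, model predictions, and a skin-tone group for each test example. The function names and sample data are hypothetical, and inverse-frequency weighting is just one simple form of re-weighting, not necessarily the method any particular team uses.

```python
from collections import Counter, defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Compute accuracy separately for each demographic group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    """Difference between the best and worst per-group accuracy."""
    return max(per_group.values()) - min(per_group.values())


def inverse_frequency_weights(groups):
    """Re-weighting: give each example a weight inversely proportional
    to its group's size, so every group contributes equally in total."""
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return [n / (k * counts[group]) for group in groups]


# Hypothetical evaluation data: four light-skinned and two dark-skinned examples.
groups = ["light"] * 4 + ["dark"] * 2
y_true = [1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 0, 0]

per_group = accuracy_by_group(y_true, y_pred, groups)
print(per_group)                          # per-group accuracy
print(accuracy_gap(per_group))            # a large gap signals uneven performance
print(inverse_frequency_weights(groups))  # per-example training weights
```

A gap near zero does not prove a model is fair, but a large gap is a clear signal to investigate. The weights could be passed to any training routine that accepts per-example weights. Results should still be reviewed manually, as the Fair Representation step recommends.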

Need help addressing and mitigating bias in your organization? Predictive UX has developed a program that helps companies create inclusive tech teams, design and build inclusive AI products, and establish governance for continuous monitoring and improvement. Learn more.
