- xAI launched the Beta version of Grok, an AI chatbot, to compete with OpenAI

- Musk says it gives more up-to-date answers than ChatGPT

- It's been trained for 2 months, but the website says they built it over 4 months

- xAI is testing & grading the Grok-1 LLM against other LLMs

- The cost will be $16/month, but it's still in Beta

- Bias and safety remain a top concern as X continues to trend more right-wing

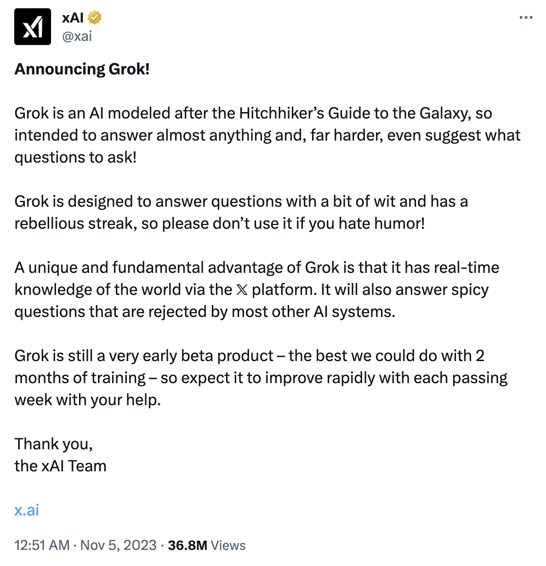

xAI has unveiled Grok, its new AI chatbot. This is going to spark a lot of interesting conversations, but the ones of greatest interest to me are how it was built and how safe it is for people to use.

Musk described Grok as "the best that currently exists." No surprise there.

What is Grok?

Grok is an artificial intelligence chatbot. The name "Grok" means to understand empathetically, which is a nice concept for an AI. The word was coined by science fiction writer Robert A. Heinlein in his novel "Stranger in a Strange Land."

With Grok, Musk aims to rival ChatGPT and push the boundaries of conversational AI systems. He said Grok is better at complex queries and math, whereas ChatGPT is better at conversation (for now). xAI announced Grok by saying it's "modeled after the Hitchhiker's Guide to the Galaxy" and intended to answer almost anything.

xAI said that by creating and improving Grok, they aim to:

- "Gather feedback and ensure we are building AI tools that maximally benefit all of humanity. We believe that it is important to design AI tools that are useful to people of all backgrounds and political views. We also want empower our users with our AI tools, subject to the law. Our goal with Grok is to explore and demonstrate this approach in public."

I have an issue with this: how can they ensure inclusion here when the training data is coming from X?

- "Empower research and innovation: We want Grok to serve as a powerful research assistant for anyone, helping them to quickly access relevant information, process data, and come up with new ideas."

This is the promise of LLMs in general. It's important to note that it is a research assistant. It will still need the right kinds of prompts to return "new" ideas.

Tech Stack

Grok's training and inference stack is built on Kubernetes, Rust, and JAX. It is a set of custom distributed systems that automatically detect and handle GPU failures, an issue the team encountered frequently during model training. They're all in on Rust, saying it gives them a codebase that is easy to refactor and programs that can run for months with minimal supervision.
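To make "automatically detect and handle GPU failures" a little more concrete, here is a minimal Python sketch of one common pattern: checkpoint periodically and roll back to the last checkpoint when a step fails. This is not xAI's implementation, and they haven't published one. The `Trainer` class, `failing_steps`, and the simulated failure are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Trainer:
    """Toy trainer that resumes from its last checkpoint after a simulated failure."""

    checkpoint_every: int = 5
    # Hypothetical: steps at which a device "fails" exactly once.
    failing_steps: set = field(default_factory=set)
    step: int = 0        # next step to run
    checkpoint: int = 0  # last step that was saved
    restarts: int = 0    # how many times we rolled back

    def run_step(self) -> None:
        if self.step in self.failing_steps:
            self.failing_steps.remove(self.step)  # fail only once per step
            raise RuntimeError(f"simulated GPU failure at step {self.step}")
        self.step += 1
        if self.step % self.checkpoint_every == 0:
            self.checkpoint = self.step  # stand-in for saving model state

    def train(self, total_steps: int) -> None:
        while self.step < total_steps:
            try:
                self.run_step()
            except RuntimeError:
                # Detect the failure, then resume from the last saved state.
                self.restarts += 1
                self.step = self.checkpoint


trainer = Trainer(checkpoint_every=5, failing_steps={7, 12})
trainer.train(total_steps=20)
print(trainer.step, trainer.restarts)  # 20 2
```

The trade-off in a scheme like this is between checkpointing often (less work lost per failure) and checkpointing rarely (less time spent saving state).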

Next up, they're building accelerators for internet-scale data pipelines. I can't wait to see what the next release has in store and how well it performs, especially from a bias, safety, and risk perspective. I'd love the chance to score it against the AI product index we've created in Design Well.

Features and Capabilities of Grok

Grok does have some features that I am eager to try out, such as:

- Rebellious Streak: Grok is supposedly going to be able to give more cheeky responses than ChatGPT. Should make for some interesting papers turned in by students using the platform to do their homework.

- Answers almost anything: Grok was designed to be able to answer almost anything. However, the big question is how accurately, to what depth, and with how much bias.

Questions I have about it:

- What will they do with the data and information collected via prompts it is fed?

- Will there be transparency about what data the model was trained on, both now and with each subsequent release?

- Will it be available in any other language besides English? How will it perform in other languages?

- What happens if someone puts PII into the chat interface?

- Will they ban people from the platform as Musk has done in the past?

Musk said he believes today’s AI makers are bending too far toward “politically correct” systems and that xAI’s mission is to create AI for people of all backgrounds and political views.

Testing

You can find some details on the xAI website about how they went from Grok-0 to Grok-1, the LLM engine behind Grok. I'm looking forward to learning more about their approach as they make information available.

Their site says Grok-0 was a prototype model with 33 billion parameters that approached LLaMA 2 (70B) on standard benchmarks while using only half of its training resources. They then made "significant" improvements and rolled out Grok-1, which scored 63.2% on the HumanEval coding task and 73% on MMLU (Measuring Massive Multitask Language Understanding).

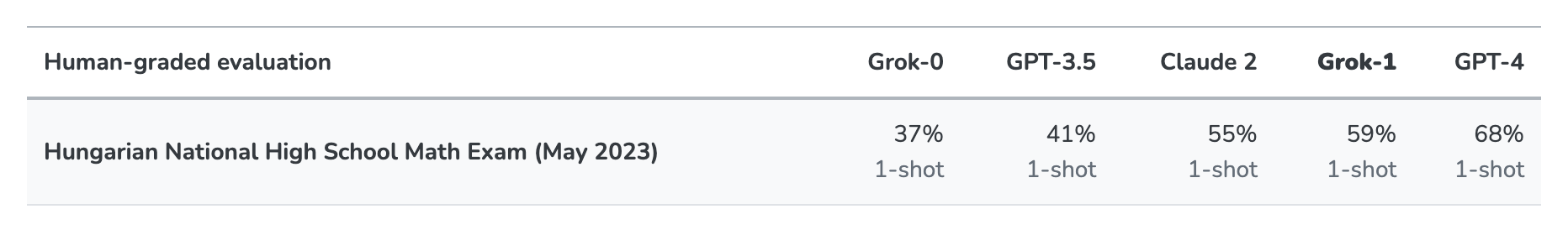
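For readers new to benchmark numbers like these, a score such as 73% is at heart an accuracy: the share of test items the model got right. The sketch below shows that arithmetic in Python. The `accuracy` function and the four-question answer key are made up for illustration and are not taken from xAI's evaluation code or from either benchmark.

```python
def accuracy(predictions: list[str], answers: list[str]) -> float:
    """Return the percentage of predictions that match the answer key."""
    if len(predictions) != len(answers):
        raise ValueError("predictions and answers must be the same length")
    if not answers:
        raise ValueError("need at least one question to score")
    correct = sum(p == a for p, a in zip(predictions, answers))
    return 100.0 * correct / len(answers)


# Hypothetical multiple-choice results: 3 of 4 correct.
model_choices = ["A", "C", "B", "D"]
answer_key = ["A", "B", "B", "D"]
print(accuracy(model_choices, answer_key))  # 75.0
```

For a coding benchmark, the same idea applies, except "correct" means the generated code passes the problem's unit tests rather than matching a letter.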

xAI's website says Grok-1 surpassed other models when tested against standard machine learning benchmarks. To be sure they were doing better, they also hand-graded Grok-1's performance against the other LLMs, with only GPT-4 outperforming Grok-1.

Pricing and Availability

Grok is currently in Beta, so not widely available, but when it rolls out formally, it will have a premium subscription for $16 per month.

That's more than ChatGPT 3.5, which is free, and $4 less than ChatGPT Plus, which includes GPT-4 for $20 per month. OpenAI also offers an enterprise tier of ChatGPT and an API so that you can integrate their models into your own product.

I'm curious how many enterprise organizations would opt for Grok over ChatGPT, Claude or one of the other large language models on the market today.

Safety & Bias

The first person I told about Grok today said, "AI previously trained on Twitter has trended very right-wing and Nazi-esque, and X has since gotten even more conservative." They were referencing the 2016 incident, revisited in a 2018 MIT Technology Review article, in which a chatbot designed by Microsoft to use Twitter data "quickly turned into a racist, sex-crazed neo-Nazi." They followed up with me afterward with a more recent article from October, in which The Washington Post outlines how X has consistently trended right-wing.

It is well known that X has lost a lot of revenue due to the major changes made by Musk. Could it be that the release of Grok is designed to prop up X financially?

Either way, I was personally delighted to see that the xAI team is being advised by Dan Hendrycks, who serves as the director of the Center for AI Safety, but disappointed that there aren't more folks on Dan's team, at least that I could see.

I applied for access to Grok, but don't have it yet. As soon as I do, I plan to test it against the AI product index we created to measure fair, safe, inclusive, and equitable AI. I won't be able to conduct a full assessment without asking their team some questions about how they govern their data, but I hope to do as much as I can and would love to come away surprised (in a good way) by the results.

References:

- https://x.ai/

- https://twitter.com/xai

- https://www.etymonline.com/word/grok

- https://www.washingtonpost.com/technology/2023/10/27/elon-musk-twitter-x-anniversary/

- https://www.technologyreview.com/2018/03/27/144290/microsofts-neo-nazi-sexbot-was-a-great-lesson-for-makers-of-ai-assistants/

- https://people.eecs.berkeley.edu/~hendrycks/

- https://www.safe.ai/

- https://www.predictiveux.com/design-well-early-access